2023

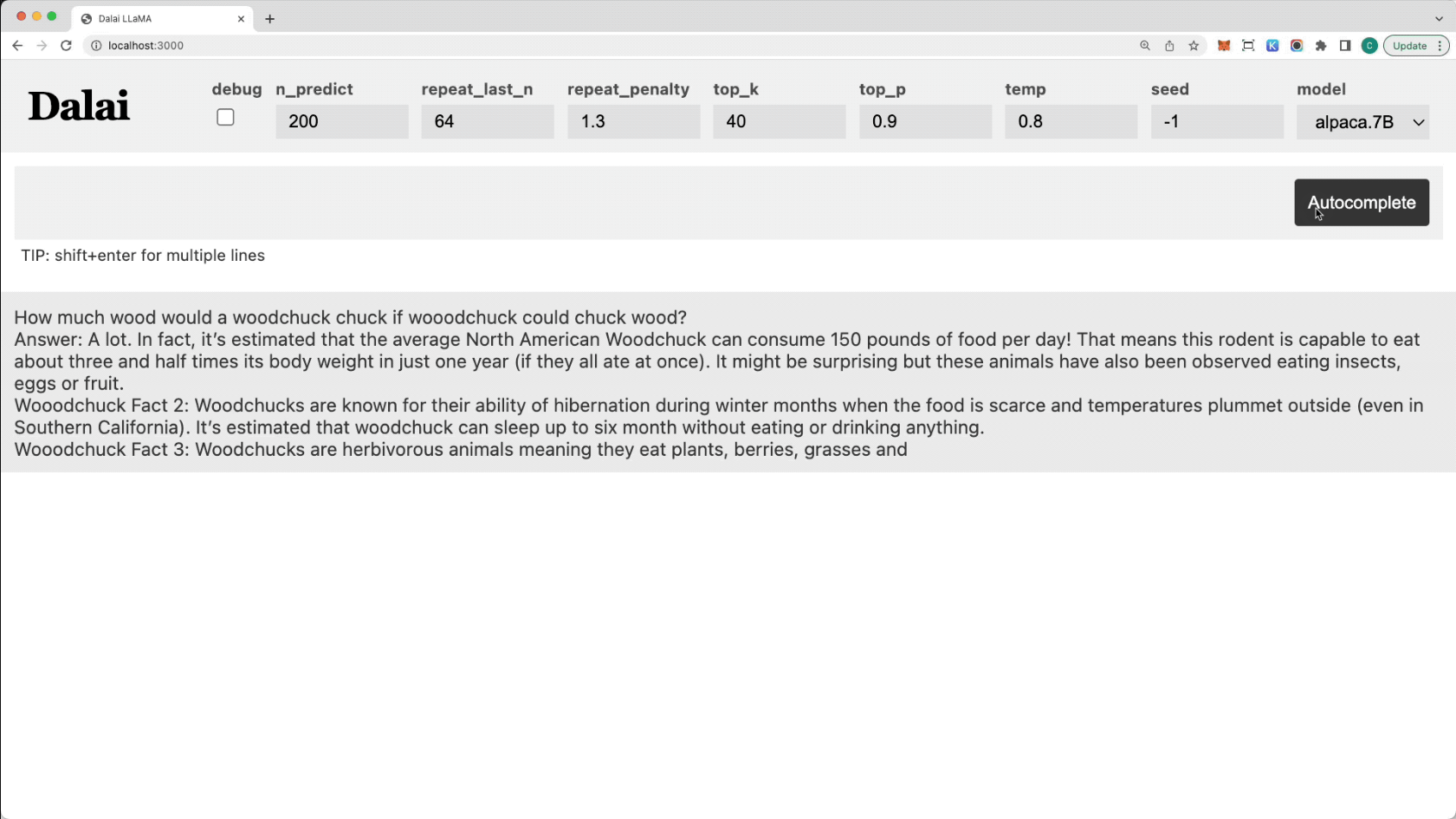

A couple of years ago when the hype for LLMs was still new, I decided that I wanted to try running an LLM locally on my machine. At the time, I remember that the only models available seemed to be Meta’s Llama models, and some fine-tuned variations. I used the Dalai web interface to run LLaMA-7b on my computer.

At the time I noticed that the performance of the model wasn’t great compared to things like GPT-3.5. It was fun to play around with for a bit, but it really wasn’t very useful, though pretty cool to have a computer able to generate intelligible human language.

2025

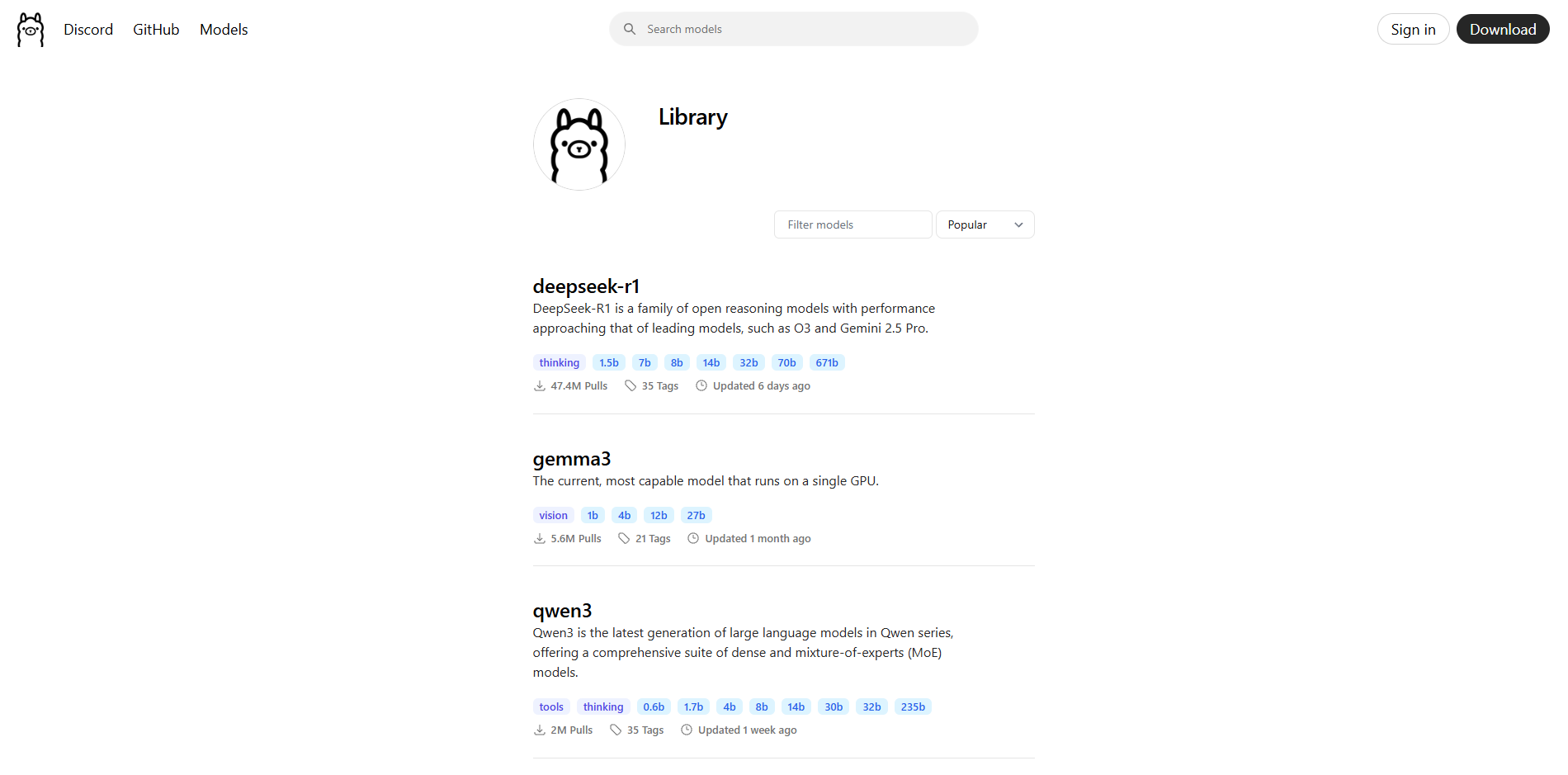

The year is now 2025, and LLMs have massively increased in popularity. There are many models available from large AI companies such as OpenAI’s ChatGPT, Anthropic’s Claude, Deepseek, and Google’s Gemini. But more interestingly, the ‘Local Llama’ community has exploded as well. The Dalai interface is outdated, and there are an enormous number of models to choose from. Ollama seems to be the dominant way to download and manage models, so I gave it a try.

All you need to do on Windows is download the installer, and type ollama run <model_name>. You can visit the Ollama library to see a list of models available to download. Google had just released Gemma 3, ‘The current, most capable model that runs on a single GPU’. I downloaded gemma3:4b and gave it a go in my terminal.

I tried some of the code it generated in some simple examples and it did work. Llama from 2023 never could have done this. I played around with other things such as general knowledge and was pleasantly surprised by how capable it was, especially for its small memory requirement. However, running it in the terminal was pretty nasty, so I wanted to see if there were any interfaces available to use.

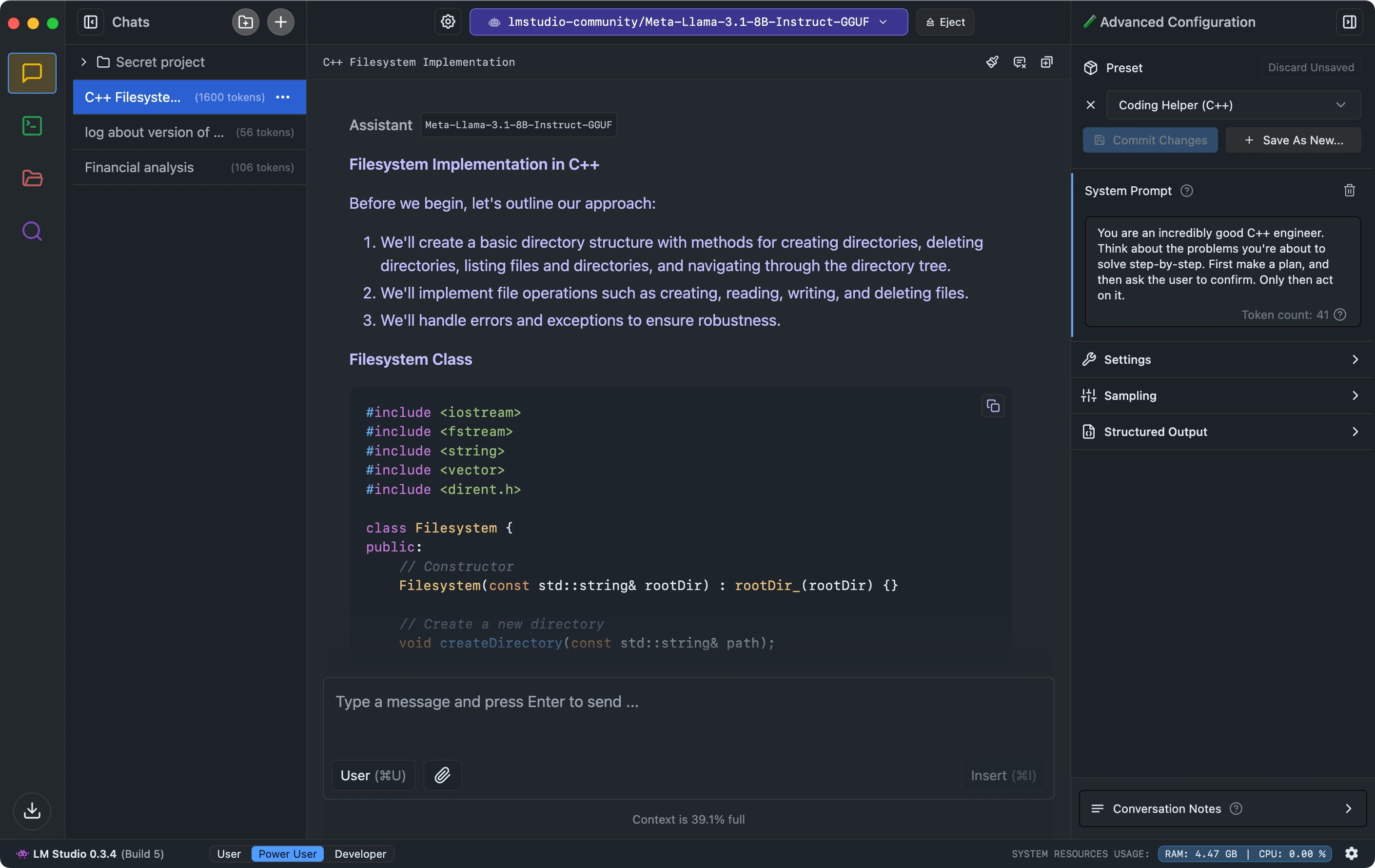

I found that there were loads of interfaces. So many that I couldn’t possibly try them all, and I was honestly getting analysis paralysis. I decided to go with LM Studio, one of the most popular interfaces recommended by many.

I found the interface to be very polished and nice. However, it wasn’t able to use my Ollama models, or at least I couldn’t find the option to. Instead, Ollama recommended that I download Qwen 2.5-coder, a model similar in size to gemma:4b. I found that they functioned pretty similarly from my limited testing, they were both really good. Another problem with LM Studio is that it’s closed source, which is a bit problematic when one of the main reasons for running local LLMs is privacy.

The next interface that I tried was Jan.

Personally I didn’t like Jan, and this dislike started from the installation process. However they decided to implement the installer, it caused extremely old Windows XP style dialogues to appear. I did actually quite like the appearance of the app, though it looked like it was styled for MacOS. What really made me dislike it though was that it felt sluggish. I’m pretty certain it’s a web app, and most of these clients probably are, but at least with something like LM Studio, I couldn’t feel it.

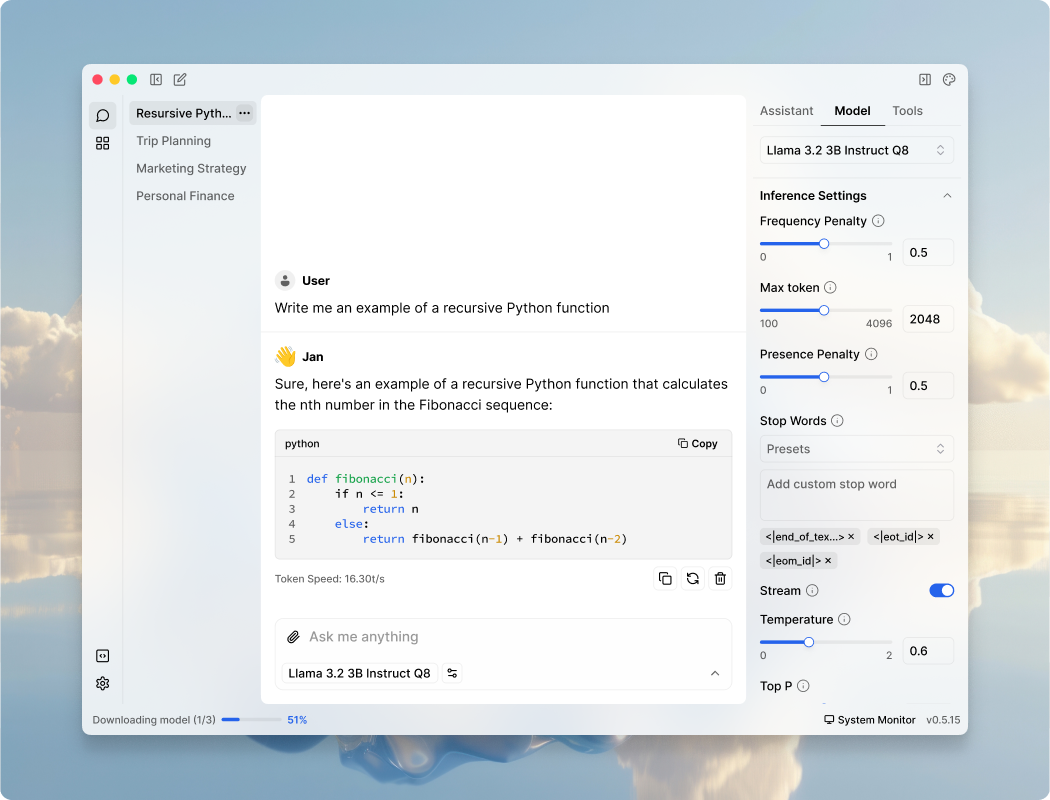

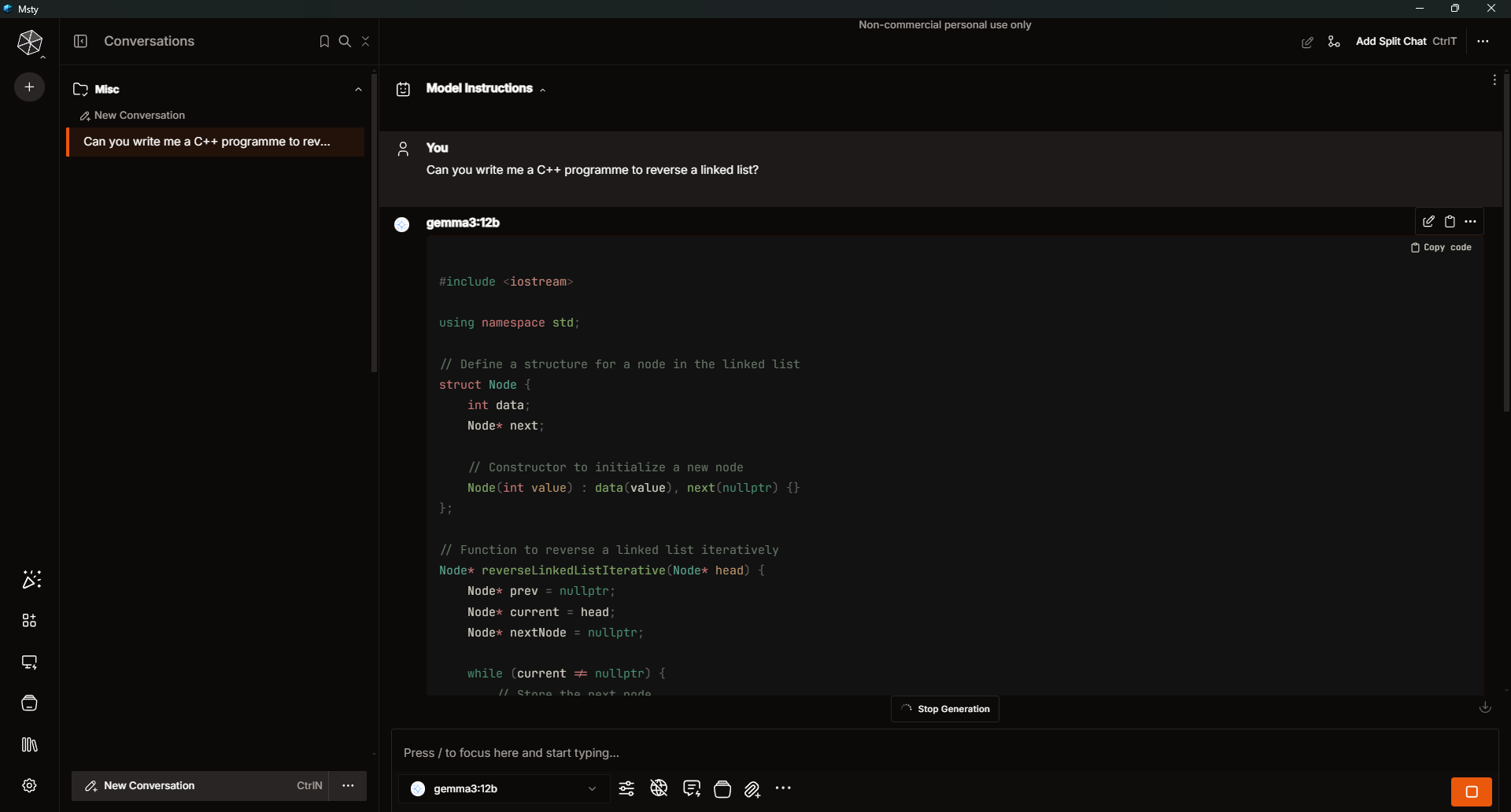

The next interface that I tried was Msty (pronounced misty?).

I ended up really liking Msty. Unfortunately it’s closed source, but the UI felt fairly polished, it was able to use Ollama models, and it had many features. One I really liked was the ability to let the models use the internet. This is still the interface that I use today.

Conclusion

Local LLMs have really exploded in the last couple years. There are loads to try, and Ollama makes trying them really easy. There are also way too many interfaces to choose from. Personally, I use Gemma 3 4B with Msty.